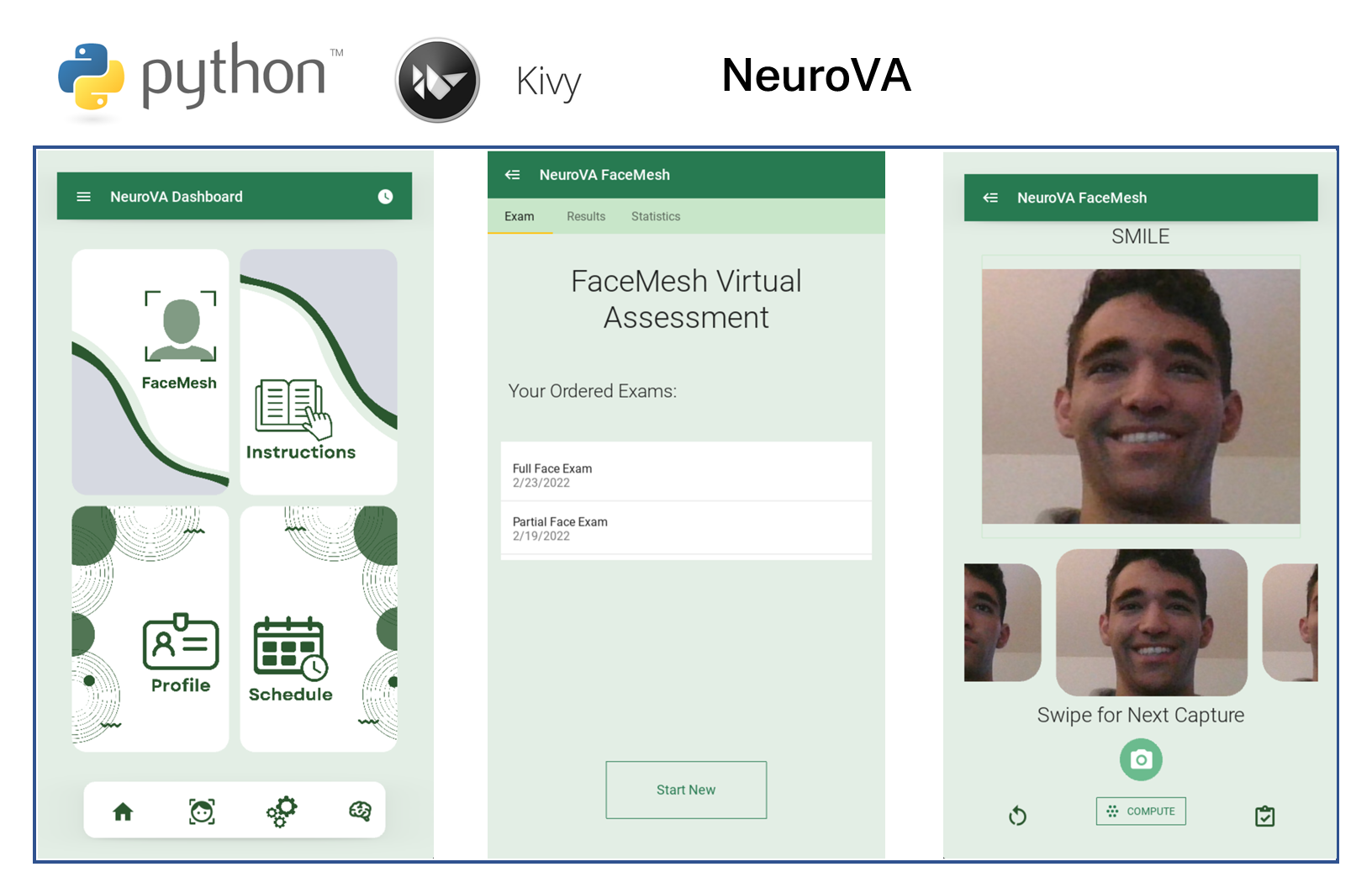

NeuroVA

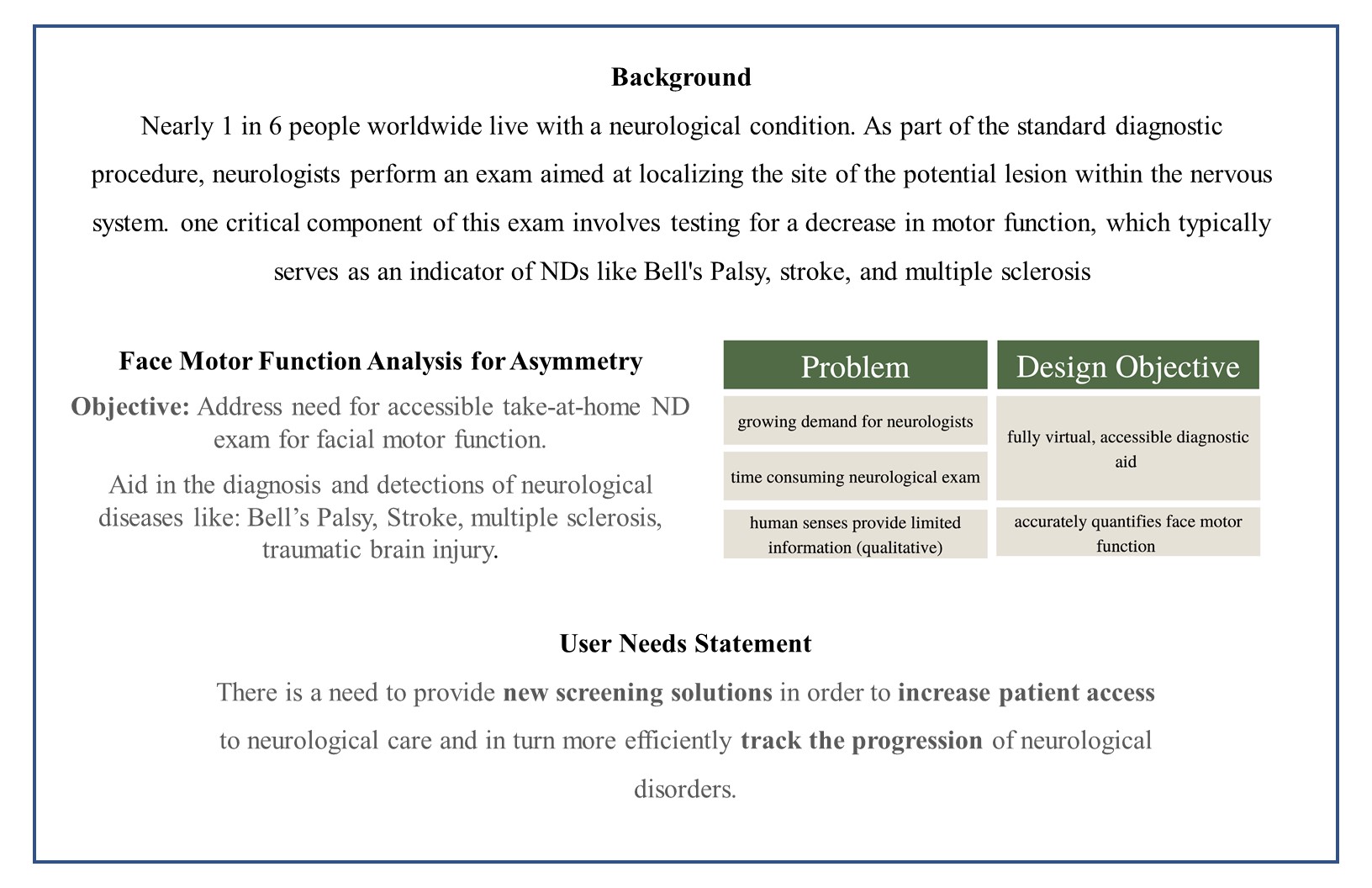

I’m working closely with my senior design team and a neurologist at UC Davis to address a gap neurologists face in the clinic: they have no way to objectively gauge a patient's facial motor function.

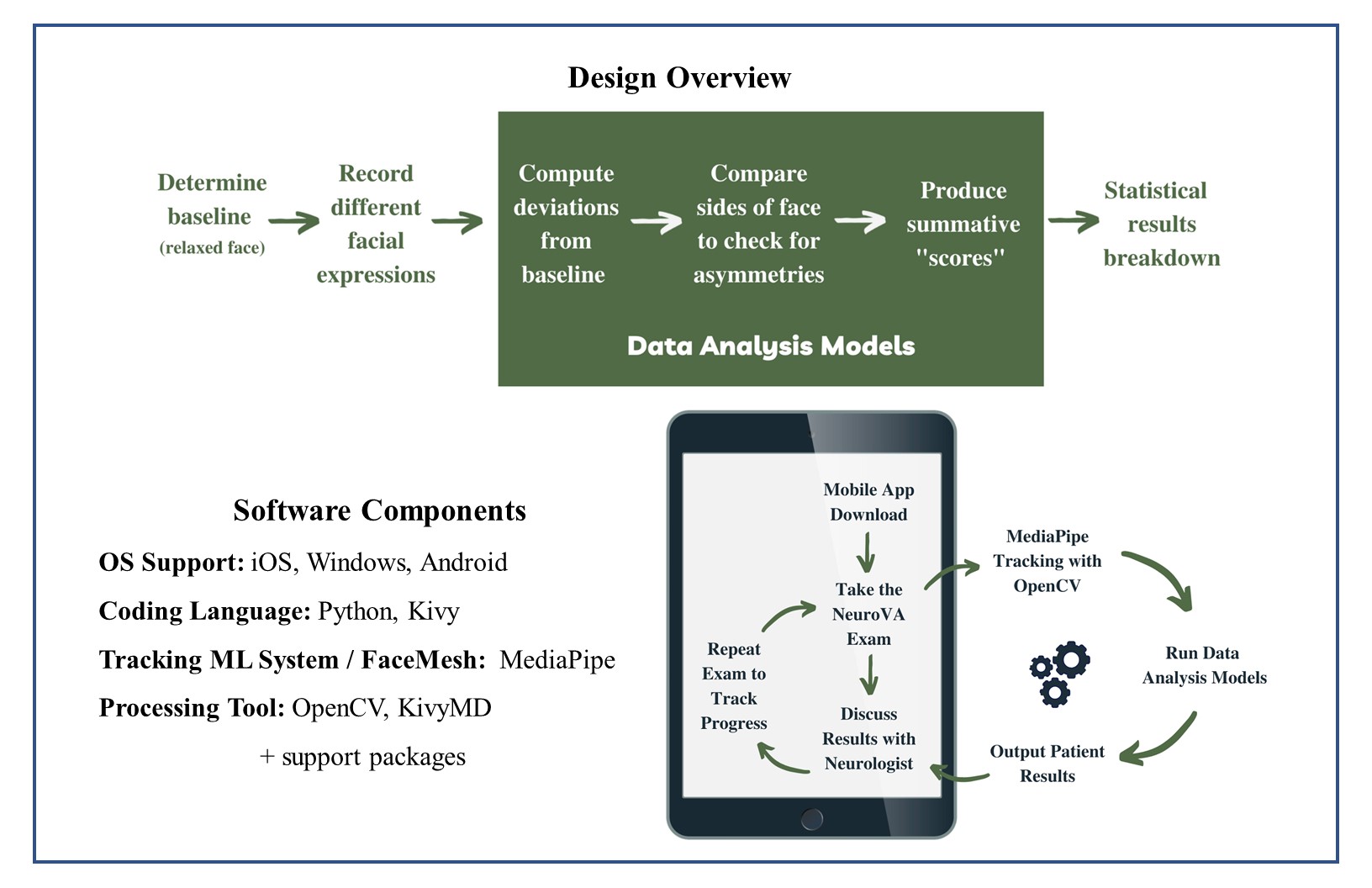

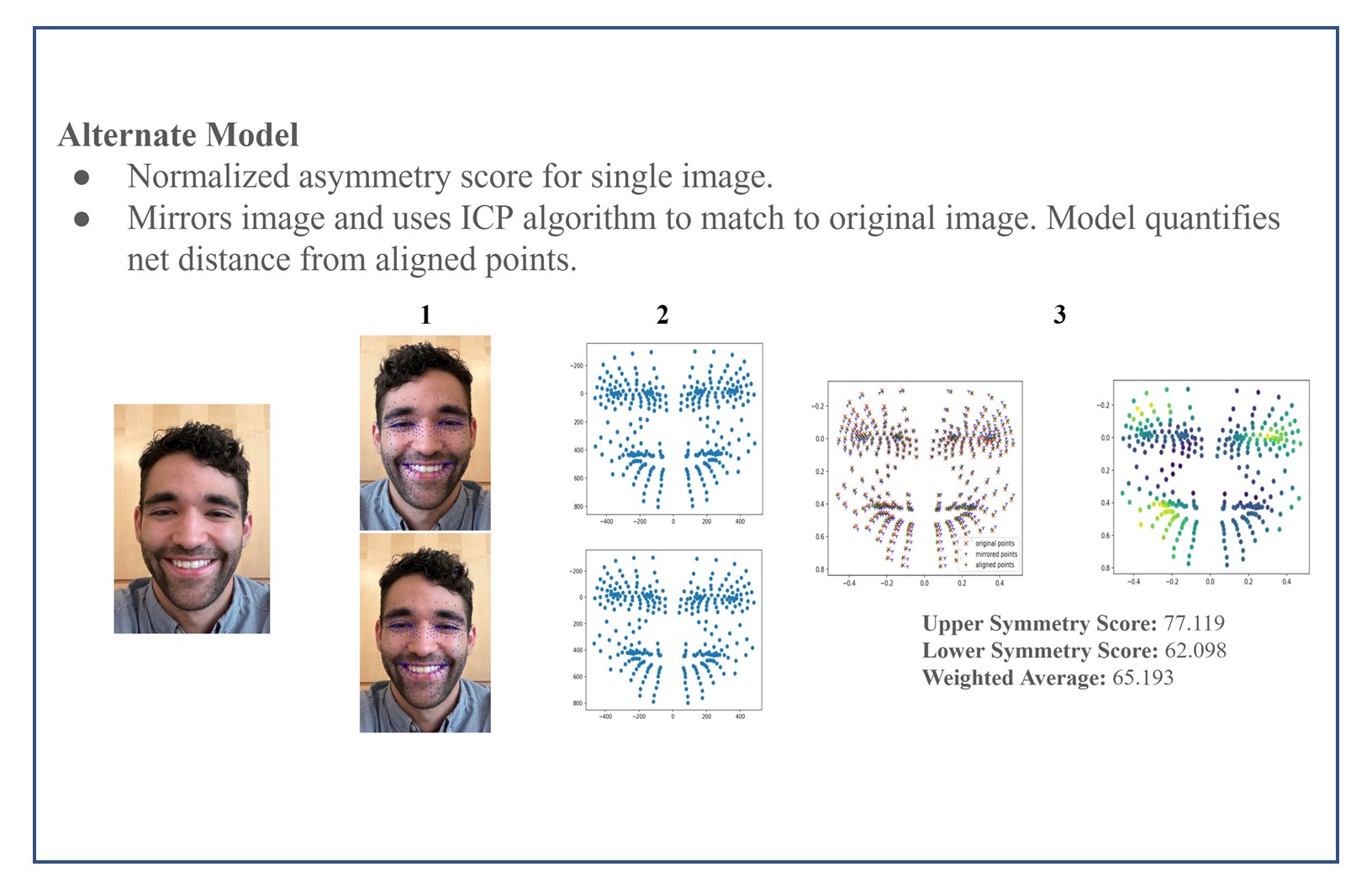

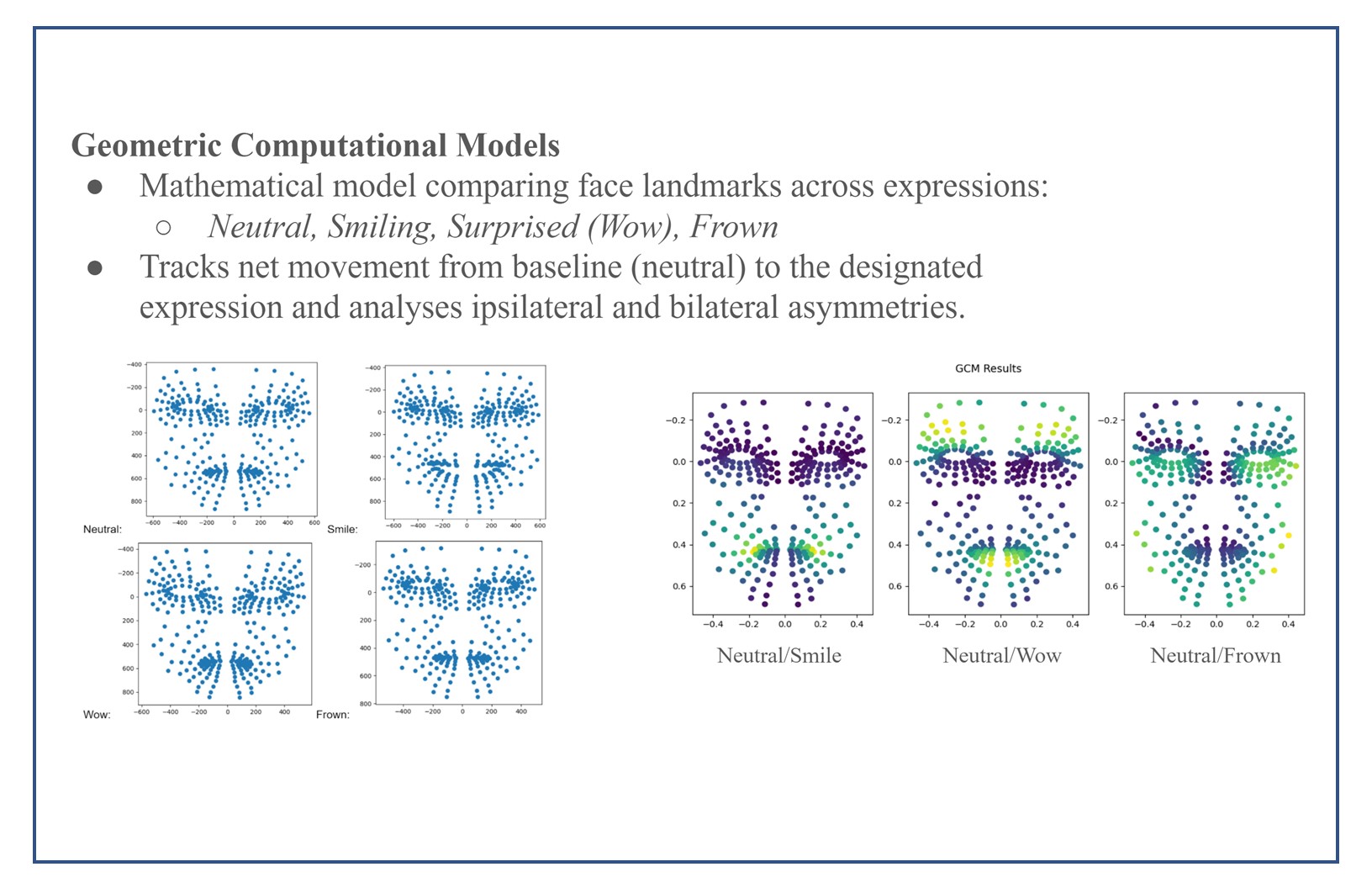

We developed a computer vision solution that uses existing machine learning algorithms to track and map a patient's facial features across different expressions. It then turns these tracked points into an analysis that neurologists can use to help arrive at an early-stage diagnosis.
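To make the "points into analysis" step more concrete, here is a minimal, hypothetical sketch. It assumes facial landmark coordinates have already been produced by a pretrained landmark detector for a neutral frame and an expression frame. From those it computes one possible metric, the ratio of left-side to right-side movement for each landmark pair. The landmark names, the `PAIRS` list, and the metric itself are illustrative assumptions, not NeuroVA's actual analysis.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]
Landmarks = Dict[str, Point]

# Hypothetical left/right landmark pairs (illustrative names only); a real
# landmark model uses its own indexing scheme.
PAIRS = [("brow_left", "brow_right"), ("mouth_left", "mouth_right")]


def displacement(neutral: Landmarks, expression: Landmarks, name: str) -> float:
    """Euclidean distance a landmark moved between the neutral and expression frames."""
    (x0, y0), (x1, y1) = neutral[name], expression[name]
    return math.hypot(x1 - x0, y1 - y0)


def symmetry_scores(neutral: Landmarks, expression: Landmarks) -> Dict[str, float]:
    """Smaller-side movement divided by larger-side movement for each pair.

    1.0 means both sides moved equally; values near 0 mean one side barely moved.
    """
    scores = {}
    for left, right in PAIRS:
        d_left = displacement(neutral, expression, left)
        d_right = displacement(neutral, expression, right)
        larger = max(d_left, d_right)
        # If neither side moved at all, treat the pair as symmetric.
        scores[f"{left}/{right}"] = 1.0 if larger == 0 else min(d_left, d_right) / larger
    return scores


# Made-up pixel coordinates: the left mouth corner moves much more than the right.
neutral = {"brow_left": (30, 40), "brow_right": (70, 40),
           "mouth_left": (35, 80), "mouth_right": (65, 80)}
smile = {"brow_left": (30, 40), "brow_right": (70, 40),
         "mouth_left": (32, 76), "mouth_right": (66, 79)}

scores = symmetry_scores(neutral, smile)
print(round(scores["mouth_left/mouth_right"], 2))  # → 0.28
```

Because the score is a ratio of two distances measured in the same image, it is unitless and does not depend on image resolution; a fuller analysis would also need to account for head pose and measurement noise.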

I was responsible for the project's full-stack development, and so far I have learned a lot about app development and about running machine learning algorithms behind a user-friendly GUI. The next step is to test the application with actual patients at the medical center. Once we have collected sufficient data, I hope to extend our current model with additional machine learning components in the analysis phase.

The application is built with Python and Kivy for cross-platform support.

The source code for this project is available on GitHub.